In this review of Dolce Gusto capsules we go through the varieties we have tasted one by one, both original and compatible. We will keep it updated as we try new ones.

Another coffee machine, another round! This time we review the Dolce Gusto Nescafé De’Longhi Genio S Plus Conte Marchese Rita Maria, or, to its friends, “the Dolce Gusto”. The Genio S Plus is designed mainly for beverages; we have been using it for about six months, and the time is ripe to discuss it in depth.

Our review of Bialetti capsules is based on our experience with the Gioia model. We will keep updating it as new original varieties come out and whenever we stumble upon a worthwhile compatible one.

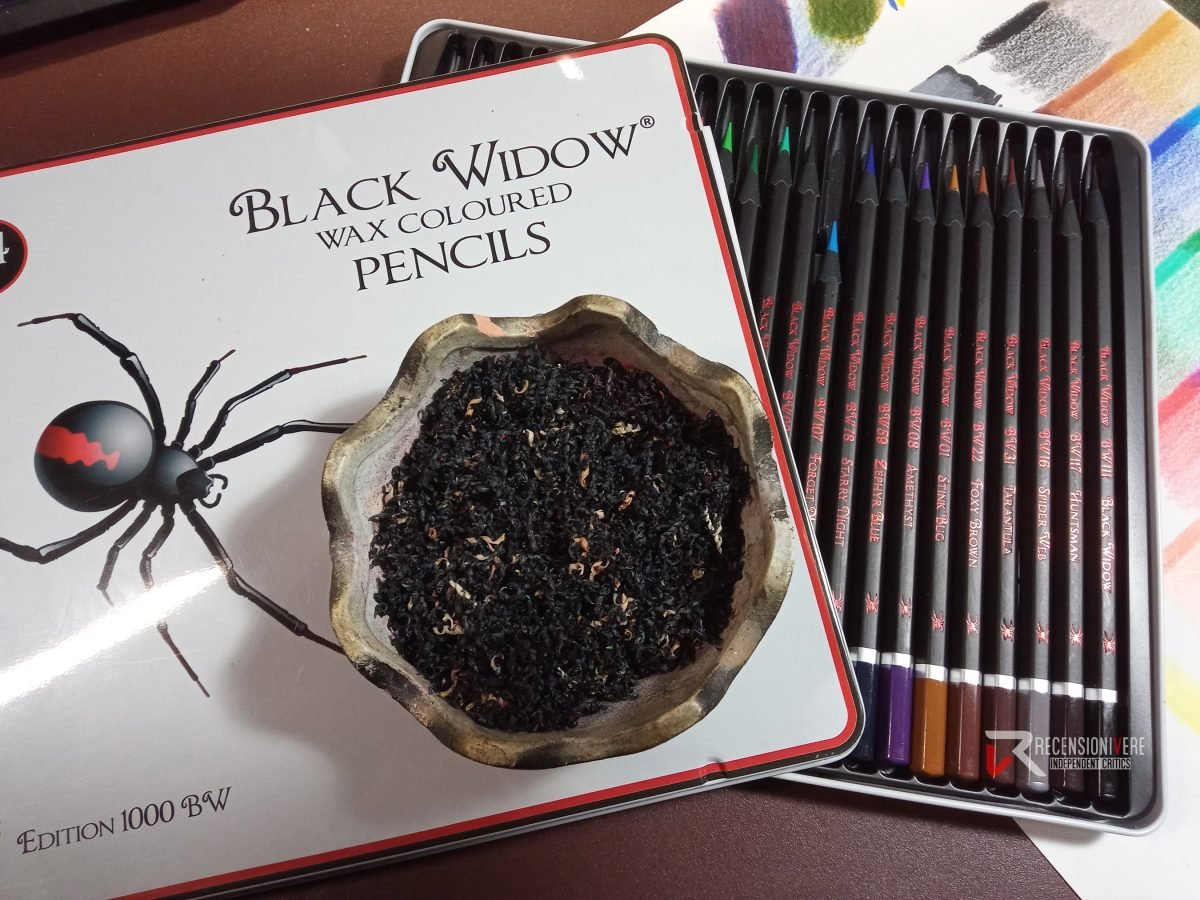

Our review of Black Widow colored pencils opens a series we have developed over the last six months, based on simple, objective tests that anyone can replicate. Black Widow pencils are well known in the adult coloring world and recognized for their quality. They are not among the cheapest options on the market, but they have become a […]

The ADLER AD 4503 vacuum sealer (4484 in the black version) has been around since last year and can also be found on sale in some brick-and-mortar stores. We think our experience with it is worth sharing in this review.

Here we are at last with the announced and long-awaited review of the Bialetti Gioia coffee machine. As you may have guessed from the videos on our YouTube channel, we have been using this machine for 4 months, and it is time to report on our experience.

Our review of the Nespresso Inissia coffee machine had been in the air for some time, and we are finally publishing it. Bought more than three years ago, it has made thousands of coffees with capsules of every brand, so we can lay out its strengths and weaknesses in detail!

A review of the Sennelier d’Artigny fixative spray, however brief, was practically mandatory. It is widely recommended for oil pastels, yet there is almost nothing out there about how well it works, apart from a handful of highly questionable videos. On Amazon it is listed as Sprayfix, so that name may slip in here, as it does in the photos and in some […]

The Meliconi HP Comfort are among the best-selling wireless TV headphones, and often among the cheapest from well-known brands. We have been using them for almost two years, so a review seemed mandatory.

In Italy (and across Europe?), Russell Hobbs distributes a wide range of drip coffee makers, all very similar to one another. We have tested the Inspire model at length and have a few thoughts to share.